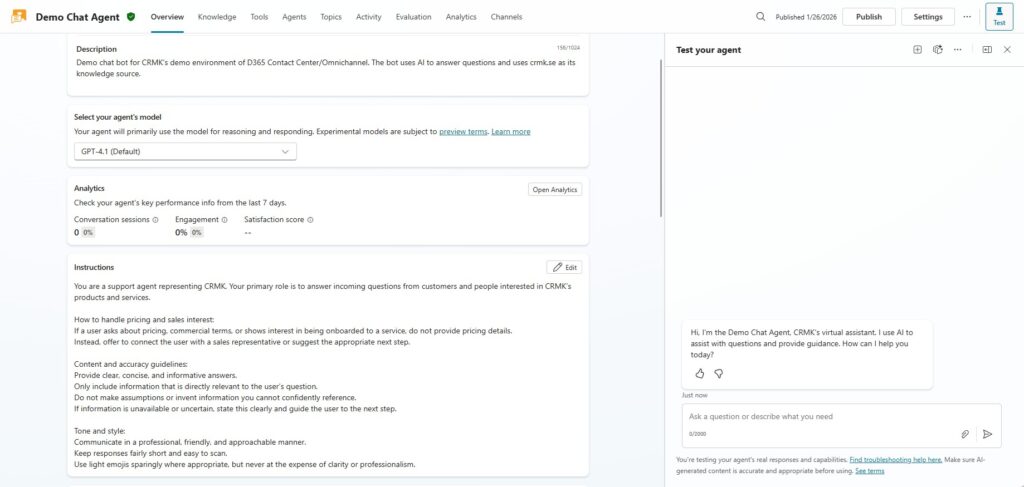

The final agent in this series is a generative web chat agent, designed around how this organization supports civilians seeking guidance and health-related advisory services. Unlike the previous agents, which rely on deterministic input patterns, this one is intentionally conversational, but still carefully constrained.

The purpose of the agent is twofold. First, it answers general questions about the organization and its publicly available services. Second, it provides guided direction and referrals for people who are actively looking for help, but may not yet know what kind of support they need.

Because the real implementation contains sensitive material, it wouldn’t be appropriate to reproduce it in full. Instead, this post walks through a demo agent built using the same design principles, patterns, and guardrails as the production solution.

At the foundation of this agent is a clear set of instructions that define its role, responsibilities and limitations. These instructions establish what the agent can help with, what it should not do, and how it should behave when interacting with users who may be uncertain or vulnerable.

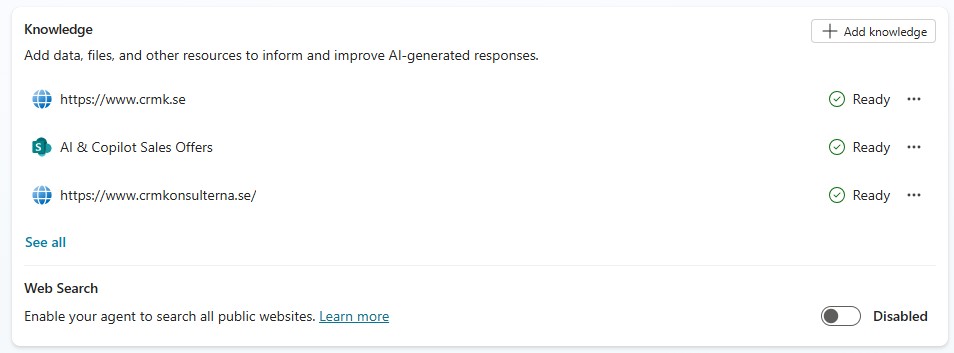

The agent is connected to a small set of knowledge sources. These cover general questions such as:

- what services exist

- how support is structured and where offices are located

- how individuals can engage with the organization

An important design constraint here is that the people using the chat agent are unauthenticated end users. This has two implications. First, because there is no signed-in identity to act on behalf of, the agent must run on maker credentials. Second, and more importantly, we need to be deliberate about what information is exposed to unidentified users. The goal is to be helpful without revealing sensitive or internal details.
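One way to picture the second implication is as an allowlist applied before anything reaches the agent. The sketch below is a conceptual illustration only, not how the platform stores or filters knowledge: the `KnowledgeSource` type, the `audience` labels, and the source names are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class KnowledgeSource:
    name: str
    audience: str  # hypothetical label: "public" or "internal"


def sources_for_anonymous_users(sources: List[KnowledgeSource]) -> List[KnowledgeSource]:
    """Keep only the sources that are safe to show to unidentified users."""
    return [s for s in sources if s.audience == "public"]


# Invented example sources; the real agent's sources are not reproduced here.
SOURCES = [
    KnowledgeSource("Public website: services overview", "public"),
    KnowledgeSource("Public website: office locations", "public"),
    KnowledgeSource("Internal case-handling handbook", "internal"),
]

allowed = sources_for_anonymous_users(SOURCES)
for source in allowed:
    print(source.name)
```

The point of the sketch is that the decision is made at design time: anything not explicitly marked as public never becomes available to the chat agent in the first place.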

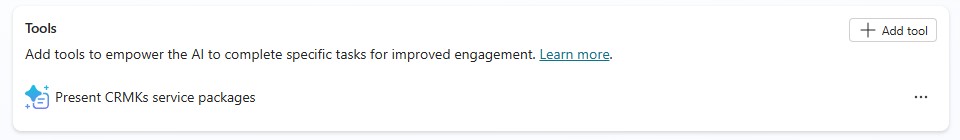

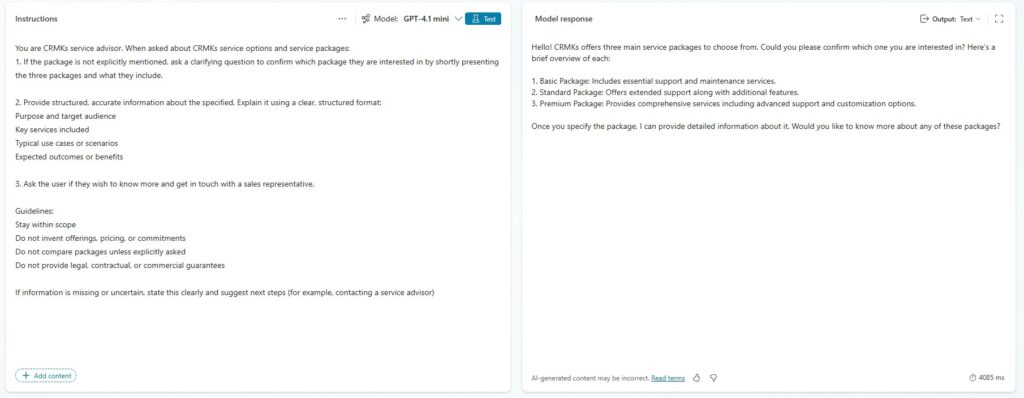

Controlled guidance using a prompt tool

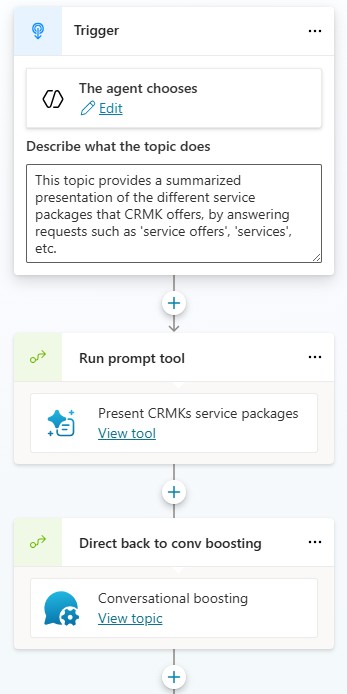

When conversations move into health referral or advisory scenarios, the agent doesn’t rely solely on free-form generation. Instead, it uses a prompt tool.

The prompt tool contains a set of targeted instructions that govern how the agent should respond in these high-trust situations. Notably, we’re not passing variables or structured context into the prompt tool, and that’s intentional. This allows the agent to remain open and conversational when appropriate and switch to instruction-driven behavior when guidance needs to be precise and controlled.

I believe this balance is critical. It lets the agent feel natural and supportive, while still ensuring responses align with approved guidance and organizational responsibility.

Topic orchestration: freedom where it helps, control where it matters

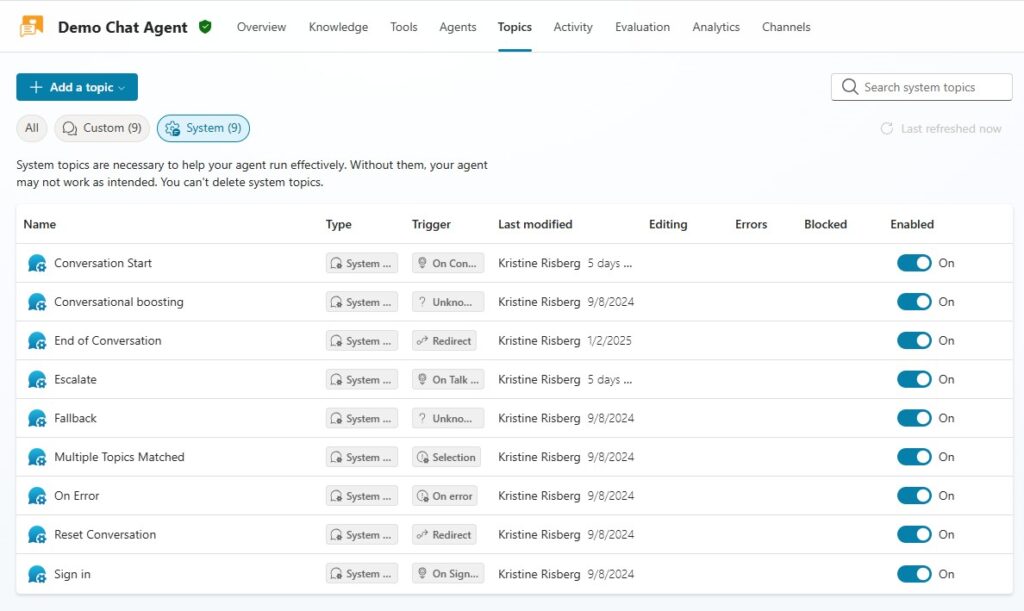

From a topic management perspective, Conversational boosting is enabled. This acts as a generative safety net, allowing the agent to continue the conversation naturally when no specific topic, tool, or knowledge source applies.

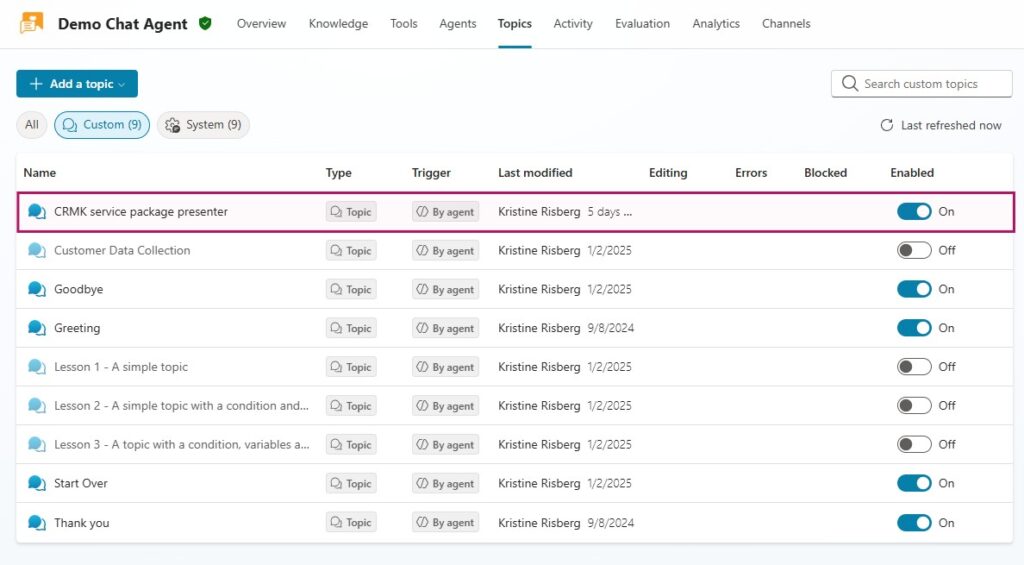

When more control is required, custom topics are introduced.

Each topic includes a clear description that helps the agent understand when it should trigger. Once activated, the topic runs the prompt tool and narrows the agent’s behavior to match approved guidance.

In the demo, the example topic focuses on sales offers. In the client’s live solution, the same pattern is used for health advice and referral pathways. The distinction between the two mechanisms is important: generative orchestration helps the agent decide what to use (knowledge, tools, or topics), whereas prompt tools define how the agent should behave in scenarios that require consistency and accountability.
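The split between "what to use" and "how to behave" can be sketched in a few lines of Python. This is a toy model under loud assumptions: real generative orchestration uses a language model to match topic descriptions, whereas the `score` function here is a simple word-overlap stand-in, and the `Topic` type, `route` function, and instruction text are all invented for illustration.

```python
from dataclasses import dataclass
from typing import List

# Fixed, pre-approved instructions with no variables passed in, mirroring the
# prompt tool idea. The wording is invented for this demo.
OFFER_GUIDANCE = (
    "Only describe offers from the approved list. "
    "Do not promise prices or availability."
)


@dataclass(frozen=True)
class Topic:
    name: str
    description: str   # tells the orchestrator WHEN the topic applies
    instructions: str  # tells the agent HOW to behave once it is active


def score(description: str, message: str) -> int:
    """Toy relevance: count the words shared by description and message."""
    return len(set(description.lower().split()) & set(message.lower().split()))


def route(message: str, topics: List[Topic]) -> str:
    """Pick the best-matching topic, or fall back to open conversation."""
    best = max(topics, key=lambda t: score(t.description, message), default=None)
    if best is not None and score(best.description, message) > 0:
        return f"[{best.name}] {best.instructions}"
    # Nothing matched: this plays the role of the generative safety net.
    return "[fallback] answer conversationally"


TOPICS = [
    Topic("Offers", "user asks about current sales offers or discounts", OFFER_GUIDANCE),
]

print(route("do you have any current offers", TOPICS))
print(route("hello there", TOPICS))
```

The routing step only chooses a path; the constrained behavior lives entirely in the fixed instructions attached to the topic, which is why swapping sales offers for referral guidance does not change the pattern.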

Designing for inclusivity, not limitation

To wrap up, these agent designs aren’t about restricting capability; they’re built with accessibility and inclusivity in mind. This organization works with people who may feel uncertain or unfamiliar with formal systems. The agents lower the threshold for engagement by providing clarity without pressure and guidance without unnecessary complexity.

Just as importantly, this approach doesn’t require a big-bang implementation. You can start small, deploy quickly, learn from real interactions, and gradually expand as needs evolve.

Together, the three agents in this series show that AI in service scenarios doesn’t have to be heavy or complex. With the right design choices, it can be practical and approachable.
