In many service and Contact Center scenarios, the value of AI isn’t about building the most advanced assistant; it’s about solving real problems quickly, reliably, and with minimal overhead.

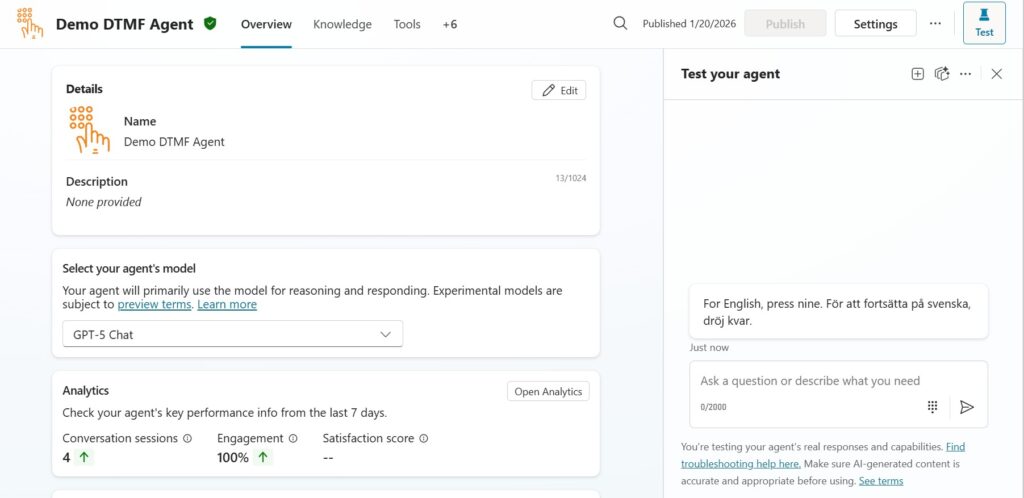

In this blog post, I’ll share a voice call agent I’ve designed and deployed for a nationwide organization working with community outreach and health referral services, where accessibility, trust, and simplicity matter just as much as automation.

The demo version shown here uses sales and support topics to keep the examples simple and relatable. In the real implementation for the client, the topics are tailored to health advice and referral pathways.

The first agent we’ll look at is a DTMF-based (dual-tone multi-frequency) voice agent, built specifically for the voice channel. Instead of relying on open-ended speech input, this agent uses keypad selections: a touch-tone interaction where the caller responds by pressing buttons.

We chose a dial-based approach for one reason: the audience. Many callers are elderly and not always Swedish-born. Some are asylum seekers or individuals without formal documentation, and for some, trust in authorities is low. In this kind of community service scenario, reducing friction matters. By keeping the interaction short, predictable, and language-light, we lower the threshold for getting help without requiring callers to describe their situation in detail.

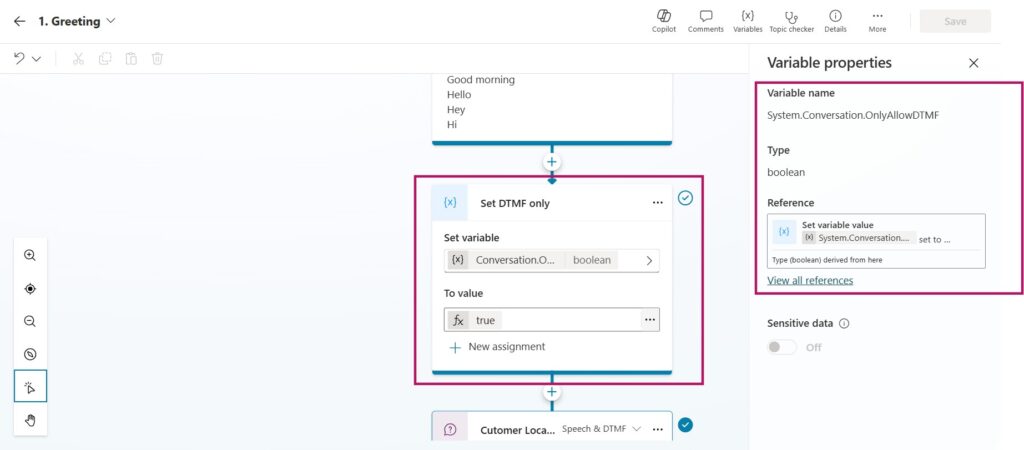

Locking the experience to keypad input

The dialogue begins in the Greeting topic. Here, we immediately set the system-level variable System.Conversation.OnlyAllowDTMF = true.

When this is enabled, the voice agent will only accept DTMF input. That means callers can’t accidentally interrupt the flow with speech, and background noise won’t confuse the agent or cause unintended routing. For high-traffic or noisy environments, this small setting can make a big difference in stability.

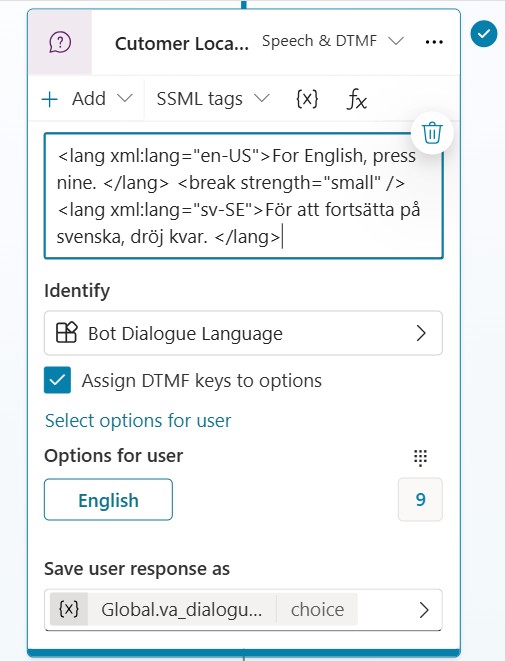

Handling language in a fast (but manual) way

Next, the agent asks an explicit question where two languages are included directly in the same prompt using SSML. This makes the language experience quick to implement, and it works well for a small design.
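As a rough illustration of such a bilingual prompt, here is a minimal sketch that builds and prints one in Python. The prompt wording, the key assignments, and the locales (sv-SE and en-US) are assumptions for the example, not the client’s actual prompt. The `speak` root is there only so the markup parses as a standalone XML document, and whether the `lang` element is honored depends on the voice configured for the agent.

```python
import xml.etree.ElementTree as ET

# Hypothetical prompt text and locales; the real prompt is not shown in this post.
PROMPTS = [
    ("sv-SE", "För svenska, tryck 1."),
    ("en-US", "For English, press 2."),
]

def build_bilingual_ssml(prompts, base_locale="sv-SE"):
    """Return one SSML string that speaks each prompt in its own language."""
    speak = ET.Element("speak", {"xml:lang": base_locale})
    for index, (locale, text) in enumerate(prompts):
        if index > 0:
            # Short pause between languages so the options do not run together.
            ET.SubElement(speak, "break", {"time": "500ms"})
        lang = ET.SubElement(speak, "lang", {"xml:lang": locale})
        lang.text = text
    return ET.tostring(speak, encoding="unicode")

ssml = build_bilingual_ssml(PROMPTS)
print(ssml)
```

Every additional language means another entry in the same prompt, so the prompt grows with the number of languages.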

However, this approach comes with a tradeoff: it doesn’t scale well. If the agent grows in complexity, hardcoding multiple languages in the prompt becomes difficult to maintain. A more maintainable approach is to define one logical prompt in the agent’s base language and then provide translations via the language file.
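The idea of one logical prompt with separate translations can be sketched conceptually as a lookup with a fallback to the base language. This is plain Python for illustration, not the Copilot Studio localization file format; the prompt ID, the texts, and the locales are hypothetical.

```python
# Hypothetical translation table keyed by locale, then by logical prompt ID.
TRANSLATIONS = {
    "en-US": {"ask_reason": "Press 1 for sales or 2 for support."},
    "sv-SE": {"ask_reason": "Tryck 1 för försäljning eller 2 för support."},
}
BASE_LOCALE = "en-US"  # assumed base language for this sketch

def localized_prompt(prompt_id, locale):
    """Return the prompt for the locale, falling back to the base language."""
    table = TRANSLATIONS.get(locale, {})
    if prompt_id in table:
        return table[prompt_id]
    return TRANSLATIONS[BASE_LOCALE][prompt_id]

print(localized_prompt("ask_reason", "sv-SE"))
print(localized_prompt("ask_reason", "fi-FI"))  # no Finnish table, so the base text is used
```

The dialogue logic refers only to `ask_reason`; adding a language then means adding a translation table, not editing every prompt.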

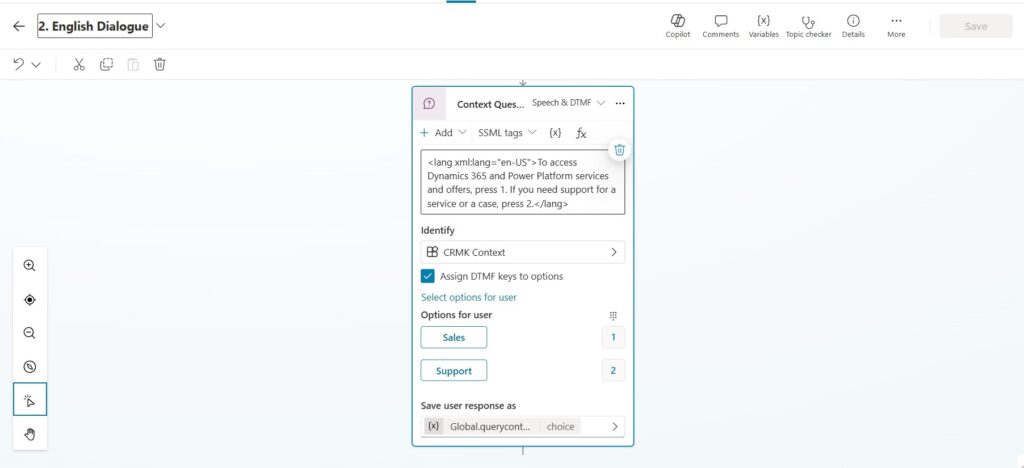

The main dialogue

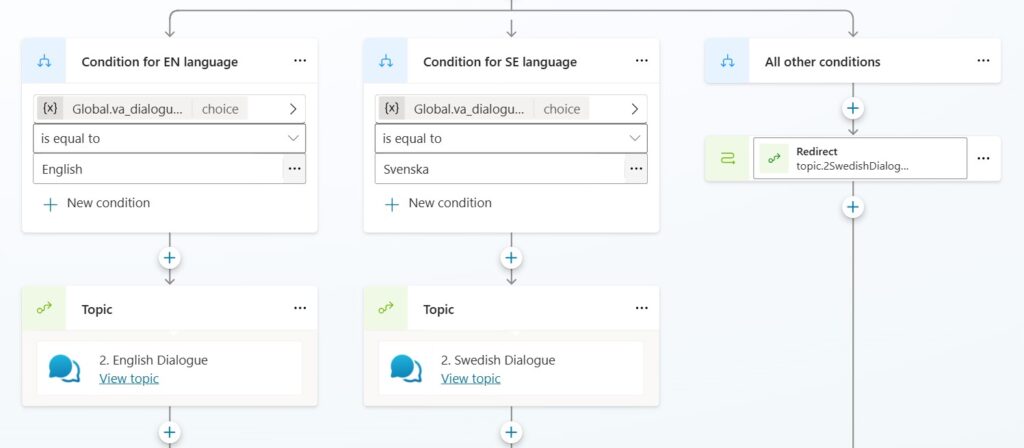

This is a scenario where a fully generative voice agent could also work well, letting callers describe their need in natural language and extracting intent dynamically. In this design, however, the caller is instead prompted with a simple two-option question about the reason for calling.

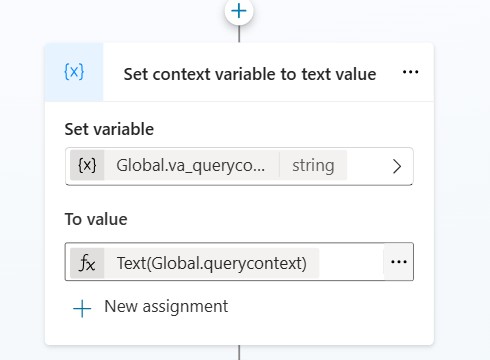

Because the choice is constrained, we can keep the intent capture deterministic, which allows us to do a 1:1 mapping of the caller’s selection to predefined service skills. The caller’s selection is stored in a global variable, querycontext, and converted into a string so it can be passed downstream into routing logic.
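A minimal sketch of that deterministic mapping, using the demo’s sales and support topics. The key assignments (1 and 2), the enum, and the function name are assumptions for illustration; in Copilot Studio the equivalent is done with the question node’s options and a variable assignment.

```python
from enum import Enum

class CallReason(Enum):
    """Assumed keypad options for the demo; values are the DTMF digits."""
    SALES = "1"
    SUPPORT = "2"

def to_query_context(pressed_key: str) -> str:
    """Convert a keypad digit to the string passed to routing, or "" if unmapped."""
    try:
        return CallReason(pressed_key).name.lower()
    except ValueError:
        # Unmapped key or no input: leave the context blank for the condition check.
        return ""

print(to_query_context("1"))
print(to_query_context("2"))
```

Because each key maps to exactly one value, the routing logic downstream never has to interpret free text.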

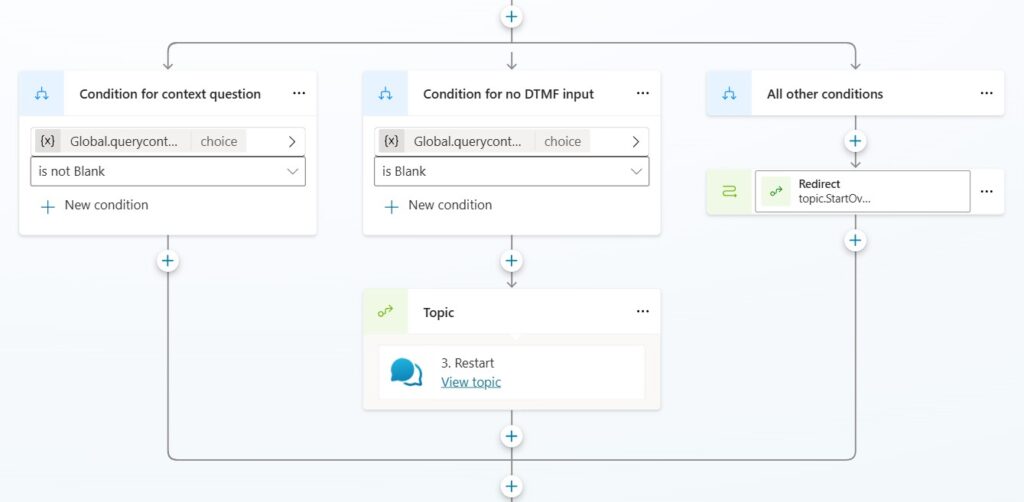

Once the caller has been asked to make a selection, the agent needs to confirm that the input is usable before continuing. This is where a condition block comes in. The caller’s keypad choice is stored in the global variable Global.querycontext. The condition logic then evaluates three possible outcomes:

1) Context question answered (DTMF input received)

The first condition checks whether Global.querycontext is not blank. This is the “happy path”: the caller has pressed one of the expected keys and the agent can safely continue the flow with a known, deterministic intent value.

2) No DTMF input (caller didn’t press anything)

If Global.querycontext is blank, the caller didn’t press anything. Instead of letting the conversation fail or continuing with missing context, the agent routes the caller into a Restart topic.

3) All other conditions (fallback behavior)

Finally, the “all other conditions” path acts as a safety net. If the input is unexpected, invalid, or doesn’t match the logic we anticipated, the agent triggers a redirect back to the start of the flow.
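The three outcomes above can be sketched as an ordered list of conditions where the first match wins and a default catches everything else. The outcome names and the blank check are assumptions for this sketch, not the exact expressions used in the condition block.

```python
def is_blank(value):
    """Treat None and empty or whitespace-only strings as blank."""
    return value is None or str(value).strip() == ""

# Branches are evaluated top to bottom; the first matching condition wins.
BRANCHES = [
    (lambda v: not is_blank(v), "continue_flow"),  # 1) DTMF input received
    (lambda v: is_blank(v), "restart_topic"),      # 2) no DTMF input
]
FALLBACK = "redirect_to_start"                     # 3) all other conditions

def evaluate(querycontext):
    """Return the outcome the condition block would pick for the stored value."""
    for condition, outcome in BRANCHES:
        if condition(querycontext):
            return outcome
    return FALLBACK

print(evaluate("sales"))
print(evaluate(""))
```

In this sketch every value matches one of the first two branches, so the fallback only comes into play if those conditions are later narrowed, for example to specific expected values.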

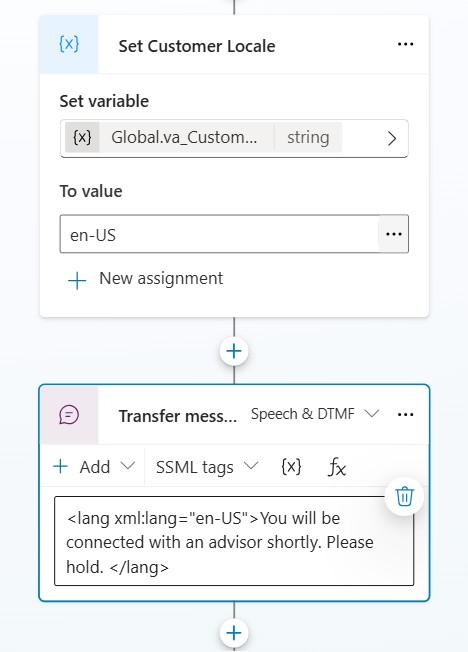

Once we know we have a valid selection, the agent sets the caller’s language locale in a global variable. Even in a DTMF-first design, locale is useful because it creates a consistent signal that can be reused for speech output and, more importantly in this design, passed along into the Contact Center context.

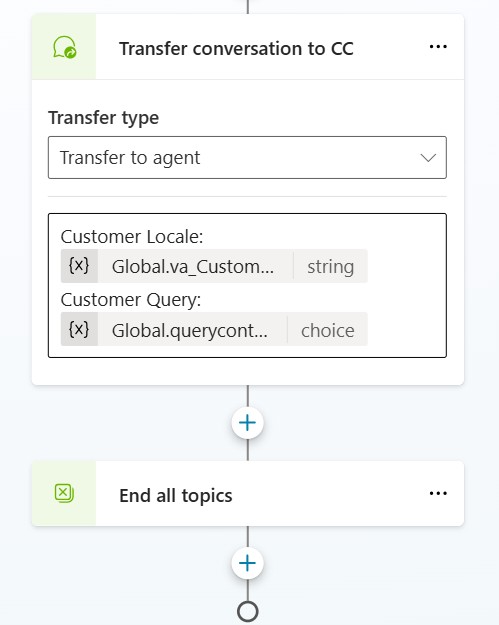

Finally, the agent performs the actual handoff and passes the variables captured, including:

- Customer Locale → Global.va_CustomerLocale
- Customer Query → Global.querycontext

Transfer to CSR

Mapping Copilot Studio context to Contact Center routing

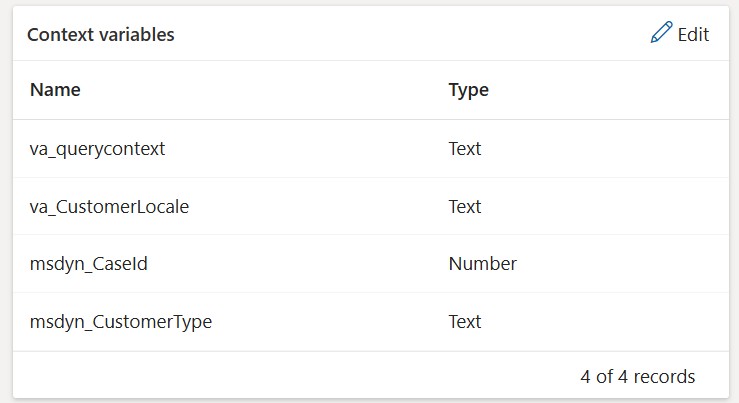

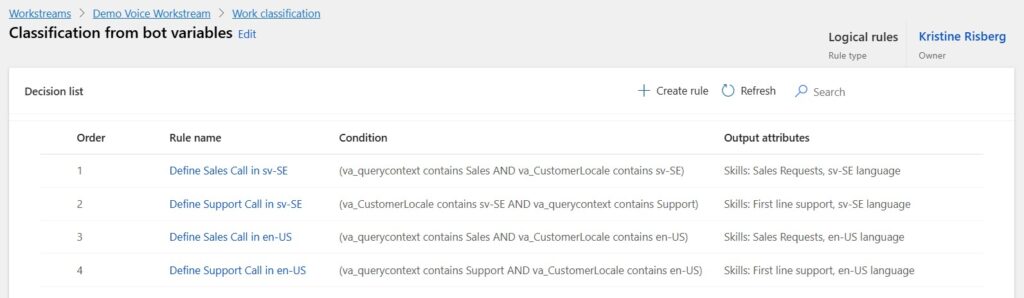

On the Contact Center side, we map Copilot Studio variables to context variables in the voice channel workstream.

This allows the incoming call to be classified against routing attributes (such as skills or categories), so the call is consistently assigned to the right service representative.
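To make the classification step concrete, here is a minimal sketch of how the two handed-off variables could drive a routing decision. The variable names come from the handoff above; the skill names, the default skill, and the default locale are hypothetical, and in practice these rules live in the workstream configuration, not in code.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RoutingDecision:
    skill: str
    language: str

# Hypothetical routing table for the demo topics.
SKILL_BY_QUERY = {"sales": "Sales", "support": "Support"}
DEFAULT_SKILL = "General"   # assumed catch-all when the query is missing or unknown
DEFAULT_LOCALE = "en-US"    # assumed default when no locale was passed

def classify(context: dict) -> RoutingDecision:
    """Map the context variables from the agent to a skill and language."""
    query = context.get("querycontext", "")
    locale = context.get("va_CustomerLocale", DEFAULT_LOCALE)
    return RoutingDecision(SKILL_BY_QUERY.get(query, DEFAULT_SKILL), locale)

handoff = {"va_CustomerLocale": "sv-SE", "querycontext": "support"}
print(classify(handoff))
```

The same pair of variables always yields the same decision, which is what keeps the assignment consistent from call to call.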

The result is a simple but effective pattern: DTMF input → predictable intent → skill-based routing, optimized for accessibility, speed, and operational reliability.

This showcases how Copilot Studio agents can be designed for practical deployment, and how you can move from idea to working service capability in a short time, without needing a heavy implementation or months of change management.
